
White Paper — Firmware Compression for Lower Energy and Faster Boot in IoT Devices

October 20, 2015 by Dr. Nikos Zervas, CAST, Inc.

The term “IoT” (Internet of Things) now covers a wide range of applications and devices with very different requirements. Most observers, however, would agree that low energy consumption is a key requirement for IoT, as many of these devices must run on batteries or harvest energy from the environment.

Looking at how IoT devices actually use energy, it is clear that most:

- Stay idle most of the time,

- Wake up periodically or in response to an event,

- Perform some kind of processing,

- Transmit the results, and

- Go back to sleep.

The second step, booting or waking up, can be a significant power drain, and savings here can reduce the overall energy budget. This article looks at exactly that.

Energy Savings through Data Compression

Specifically, here we will show how GZIP data compression can help lower energy dissipation in embedded systems that use code shadowing, a common technique employed in IoT devices.

The basic idea is simple: on-the-fly decompression of previously compressed firmware reduces the data load and minimizes the number of accesses to long-term storage during boot or wake-up, hence reducing the energy (and the delay) during this critical phase of operation.

The possible energy and time-to-boot savings are proportional to the data compression level, which in turn depends on the compression algorithm and on the code itself. The real-life examples explored here indicate that code size (and with it boot energy and time-to-boot) can be reduced by as much as 50% using commercially available IP cores for hardware Deflate/GUNZIP decompression.

Furthermore, we will see that the savings much more than offset the extra resources used by building the right decompression core into the system.

Code Shadowing Versus Execute In Place

While they are in sleep mode, low-power embedded systems typically store their application code—and in some cases also application data—in a Non-Volatile Memory (NVM) device such as Flash, EPROM, or OTP.

When such systems wake up to perform their task, they run the application code via either of two methods:

- They fetch and execute the code directly from the NVM, called XIP for eXecute In Place, or

- They first copy the code to an on-chip SRAM unit, called the shadow memory, and execute it from there.

Which method is best depends on the access speed and access energy of the NVM. In general, NVMs are significantly slower than on-chip SRAMs, and reading data from an NVM costs much more energy than reading the same data from an on-chip SRAM (especially when data are accessed in random order).

While using shadow memory seems best when considering the system’s active mode, the picture changes when we recall that an IoT device is usually asleep for most of its lifetime. Large on-chip SRAMs unfortunately suffer from leakage currents, and hence consume power even when in sleep mode, while most NVMs do not.

Designers therefore often choose code shadowing in cases where the shadow SRAM can be kept relatively small, or where stringent real-time requirements make the slow access times of XIP unacceptable.
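The trade-off described above can be written down as a rough per-cycle energy model. The following Python sketch is illustrative only: the function names and every parameter (per-fetch energies, per-byte copy energy, leakage power, sleep time) are assumptions introduced here, not figures from this paper, and real values would have to come from the NVM and SRAM datasheets.

```python
def xip_energy(fetches, e_nvm_fetch):
    """Energy (J) of one active period when code is fetched directly from the NVM."""
    return fetches * e_nvm_fetch


def shadow_energy(code_bytes, fetches, e_nvm_copy_per_byte, e_sram_fetch,
                  p_sram_leak, sleep_seconds):
    """Energy (J) of one sleep/wake cycle when code is shadowed in on-chip SRAM."""
    copy = code_bytes * e_nvm_copy_per_byte   # sequential copy NVM -> SRAM at wake-up
    run = fetches * e_sram_fetch              # instruction fetches from the shadow SRAM
    leak = p_sram_leak * sleep_seconds        # SRAM leakage while the device sleeps
    return copy + run + leak


def shadowing_wins(code_bytes, fetches, e_nvm_fetch, e_nvm_copy_per_byte,
                   e_sram_fetch, p_sram_leak, sleep_seconds):
    """True when shadowing costs less energy per cycle than XIP under this model."""
    return shadow_energy(code_bytes, fetches, e_nvm_copy_per_byte, e_sram_fetch,
                         p_sram_leak, sleep_seconds) < xip_energy(fetches, e_nvm_fetch)
```

In this model, a smaller code image lowers the copy term directly, and a smaller shadow SRAM would also lower the leakage term, which is the lever the next section pulls.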

Lower-Power Code Shadowing via Fast Data Compression

We can address both issues—and steer the design decision towards the energy-saving code shadowing method—by reducing the size of the application code stored in the NVM.

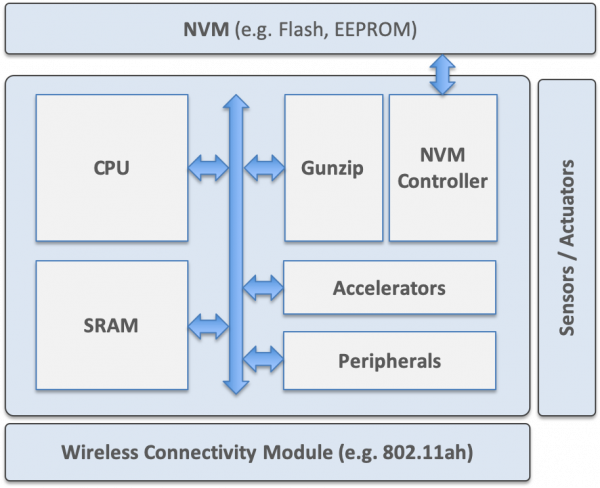

Compressing the code using a lossless algorithm such as GZIP achieves this, but means the code must be decompressed before execution. Figure 1 illustrates an example IoT system architecture that does this. Here the NVM controller connects to the SoC bus (and from there to the on-chip SRAM) via a decompression engine, like the GUNZIP IP core offered by CAST.
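As a software analogy for this data path, the sketch below streams a gzip image out of a simulated NVM in small chunks and inflates it on the fly into a buffer standing in for the shadow SRAM. It uses Python's standard `zlib` module rather than a hardware core; the `shadow_copy` function, the `read_nvm` callback, the chunk size, and the stand-in firmware pattern are all assumptions made for illustration.

```python
import gzip
import io
import zlib


def shadow_copy(read_nvm, chunk_size=256):
    """Stream a gzip-compressed image out of NVM and inflate it into a shadow buffer.

    read_nvm(n) is a hypothetical callback returning up to n bytes from the NVM.
    Returns the decompressed code and the number of NVM bytes actually read.
    """
    inflater = zlib.decompressobj(16 + zlib.MAX_WBITS)  # 16 + wbits selects gzip framing
    shadow = bytearray()                                # stands in for the on-chip SRAM
    nvm_bytes_read = 0
    while not inflater.eof:
        chunk = read_nvm(chunk_size)
        if not chunk:
            raise ValueError("compressed image ended before the gzip trailer")
        nvm_bytes_read += len(chunk)
        shadow += inflater.decompress(chunk)            # decompress as the data arrives
    return bytes(shadow), nvm_bytes_read


# Stand-in firmware: a repetitive byte pattern, not a real binary.
firmware = bytes(range(256)) * 64
image = gzip.compress(firmware)
code, nvm_reads = shadow_copy(io.BytesIO(image).read)
```

The point of the sketch is that `nvm_reads` counts compressed bytes only, so the number of NVM accesses shrinks with the compression ratio while the shadow buffer still receives the full code image.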

Storing compressed code means fewer energy-expensive NVM accesses are required for system wake-up, but now we have added the extra step of decompression with its own delays and energy consumption. Whether this is an overall good idea depends on:

- How much can we reduce the size of the application code, i.e., what is the achievable compression ratio, and

- What are the silicon area, power and latency requirements for the decompression hardware when we tune the compression algorithm settings to achieve a worthwhile compression ratio?

Let us next explore these factors by working through the numbers to see whether code shadowing with compression really provides a net energy savings.

Example Systems: How Much Energy is Really Saved?

Let us consider three IoT-like systems:

- In our first example, the R8051XC2 8051 microcontroller runs the Cygnal FreeRTOS port.

- In our second example, the BA22-DE processor runs a sensor control application with multiple threads managed by a FreeRTOS port.

- In our third example, a Cortex-M3 processor is running InterNiche Technologies’ demo of embedded TCP/IP and HTTP stacks.

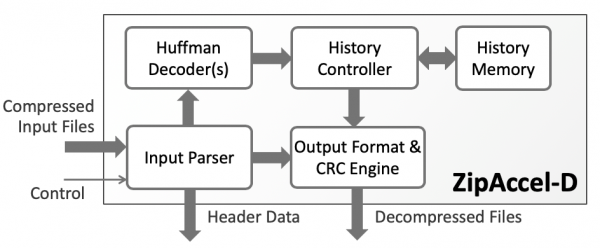

In all cases, we use the ZipAccel-D GUNZIP IP core to decompress the firmware as it is read out of a low-power serial Flash NVM memory.

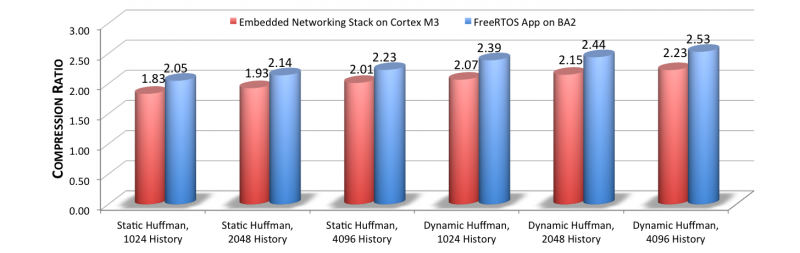

The energy savings depend on the compression level, which in turn depends on the compressibility of the code itself (a function of the ISA and the application) and the chosen GZIP parameters. The GZIP parameters most affecting the compression are the type of the Huffman engine and the size of the History. These parameters also affect the silicon requirements and latency of our GZIP engine.
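To get a feel for how these two parameters affect a particular binary, one can sweep them in software before committing to a hardware configuration. The sketch below uses Python's `zlib` as a stand-in compressor. It assumes that the History size corresponds to the Deflate window size (`wbits`), that zlib's `Z_FIXED` strategy is a fair proxy for static Huffman tables, and that compression level 9 is used; the function names are hypothetical, and the resulting ratios are not the ZipAccel-D figures reported in this paper.

```python
import zlib

# zlib strategy that forces fixed (static) Huffman codes; 4 is its value in zlib.h.
Z_FIXED = getattr(zlib, "Z_FIXED", 4)


def deflate_size(data, history_bytes, static_huffman):
    """Return the raw-Deflate size of data for a given history size and Huffman mode."""
    wbits = history_bytes.bit_length() - 1          # 1024 -> 10, 2048 -> 11, 4096 -> 12
    strategy = Z_FIXED if static_huffman else zlib.Z_DEFAULT_STRATEGY
    comp = zlib.compressobj(9, zlib.DEFLATED, -wbits, 8, strategy)  # negative wbits: no header
    return len(comp.compress(data) + comp.flush())


def sweep(data):
    """Compressed/uncompressed size ratio for each Huffman mode and history size."""
    return {
        ("static" if static else "dynamic", history):
            deflate_size(data, history, static) / len(data)
        for history in (1024, 2048, 4096)
        for static in (True, False)
    }
```

Calling `sweep(open("firmware.bin", "rb").read())` on a real image (the file name is a placeholder) returns six ratios that can be set against the area and latency cost of each configuration.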

The uncompressed code sizes for our applications are 25.5 kBytes for the 8051 system, 161 kBytes for the BA2 system, and 985 kBytes for the Cortex-M3 system. Figure 3 shows the compression ratio for each of our example binaries, and Table 1 lists the area and latency of our decompression core for different sets of GZIP parameters.

| ZipAccel-D Configuration | Area in kGates | Memory in kBytes | Latency in clock cycles |
|---|---|---|---|
| Static Huffman, 1024 History | 22 | 1.5 | 20 |
| Dynamic Huffman, 1024 History | 38 | 6.0 | ~1,500 |
| Static Huffman, 2048 History | 22 | 2.5 | 20 |
| Dynamic Huffman, 2048 History | 38 | 7.0 | ~1,500 |
| Static Huffman, 4096 History | 22 | 4.5 | 20 |
| Dynamic Huffman, 4096 History | 38 | 9.0 | ~1,500 |

To keep the GZIP processing latency and silicon overhead low, we will use Static Huffman tables and a 2048 History. This makes our compressed code about half the size of the uncompressed code, and similarly cuts the NVM size needed for code storage as well as the time and energy required to read the code out during boot or wake-up. Table 2 and Table 3 summarize these savings, assuming modern low-power serial Flash NVMs with a 5 mA read current and a 50 MHz read clock.

| | Code Size in kBytes | | | Required NVM Size | | |
|---|---|---|---|---|---|---|
| | System #1 | System #2 | System #3 | System #1 | System #2 | System #3 |
| Uncompressed Code | 25.5 | 161 | 985 | 256 kbit | 2 Mbit | 8 Mbit |
| Compressed Code | 10.9 | 76 | 511 | 128 kbit | 1 Mbit | 4 Mbit |
| Savings | 57.25% | 52.80% | 48.12% | 50.00% | 50.00% | 50.00% |
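The NVM sizes and savings percentages in this table can be cross-checked with a few lines of arithmetic. The sketch below assumes that serial Flash parts come in power-of-two bit densities and that 1 kByte means 1024 bytes; both are assumptions made here rather than statements from the paper, and the function names are hypothetical.

```python
def nvm_bits_required(code_kbytes):
    """Smallest power-of-two NVM density (in bits) that holds the code image."""
    code_bits = int(code_kbytes * 1024 * 8)   # assumes 1 kByte = 1024 bytes
    density = 1
    while density < code_bits:
        density *= 2
    return density


def saving_percent(before, after):
    """Relative reduction from before to after, in percent."""
    return 100.0 * (before - after) / before
```

For example, `nvm_bits_required(25.5)` selects a 256 kbit part and `nvm_bits_required(10.9)` a 128 kbit part, while `saving_percent(25.5, 10.9)` rounds to the 57.25% listed for System #1.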

| | Boot Time in msec | | | Boot Power in mA × sec | | |
|---|---|---|---|---|---|---|
| | System #1 | System #2 | System #3 | System #1 | System #2 | System #3 |
| Uncompressed Code | 3.98 | 25 | 154 | 0.02 | 0.13 | 0.77 |
| Compressed Code | 1.7 | 12 | 80 | 0.01 | 0.06 | 0.40 |
| Savings | 57.29% | 52.00% | 48.05% | 57.25% | 52.80% | 48.12% |
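The boot figures follow from the code size, the 50 MHz read clock, and the 5 mA read current. The sketch below is a simplified model that assumes one bit is transferred per clock cycle, ignores command and address overhead, and takes 1 kByte as 1000 bytes. The paper does not state its exact unit convention, so this model lands within a few percent of the tabulated values rather than reproducing them exactly. The mA × sec figure is a charge, which is proportional to energy at a fixed supply voltage. Function names are hypothetical.

```python
def read_time_ms(code_kbytes, clock_hz=50e6, bits_per_clock=1):
    """Time (ms) to stream the code image out of a serial NVM."""
    code_bits = code_kbytes * 1000 * 8        # assumes 1 kByte = 1000 bytes
    return 1000.0 * code_bits / (clock_hz * bits_per_clock)


def read_charge_mAs(code_kbytes, read_current_mA=5.0, clock_hz=50e6):
    """Charge (mA x sec) drawn by the NVM while the image is read out."""
    return read_current_mA * read_time_ms(code_kbytes, clock_hz) / 1000.0
```

Under these assumptions `read_time_ms(25.5)` is about 4.08 ms and `read_time_ms(10.9)` about 1.74 ms. Because both time and charge scale linearly with code size, the relative savings equal the code-size savings regardless of the unit convention.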

The resource savings average about 50% and are clearly significant, but at what cost?

Analyzing Compression Overheads

Using compression in the manner described introduces overheads in two areas: time and energy. The ZipAccel-D decompression core we’re using in our example systems introduces a latency of 25 to 2000 clock cycles depending on whether static or dynamic Huffman tables are used for compression.

Even at 2000 cycles latency, and assuming that the decompression core operates at the NVM’s 50 MHz clock, the additional delay added by the decompression core is just 0.04 msec. So, the additional delay due to compression is practically negligible, since the time to just read out the code from the NVM is two orders of magnitude higher. On the energy side, the power usage of the decompression core is negligible while the system is active, but it also consumes energy while the system is idle. The significance of this idle-state power drain depends on the duty cycle of the system.

In our example systems, the idle power usage of the decompression core is 3 to 6 orders of magnitude lower than the power savings it enables. However, since energy is power multiplied by time, longer system sleep times make this extra power drain more important. With the huge power savings we achieve, it is clear that storing compressed code and decompressing it when needed yields a net system energy savings for most IoT systems, even those with a duty cycle as low as a few msec per day.
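Both overheads reduce to simple arithmetic. The sketch below converts a latency in clock cycles into a delay, and estimates the sleep interval at which the idle drain of the decompression core would consume the charge saved at boot. The idle current is a placeholder that must come from the actual silicon implementation; it, the function names, and the assumption that the core's idle drain is the only overhead are introduced here for illustration and are not taken from the paper.

```python
def decompression_delay_ms(latency_cycles, clock_hz=50e6):
    """Delay (ms) added by the decompression core for a given latency in cycles."""
    return 1000.0 * latency_cycles / clock_hz


def break_even_sleep_s(charge_saved_mAs, core_idle_current_mA):
    """Sleep time (s) after which the core's idle drain equals the boot charge saved.

    core_idle_current_mA is a hypothetical, implementation-specific value.
    """
    return charge_saved_mAs / core_idle_current_mA


def net_charge_saved_mAs(charge_saved_mAs, core_idle_current_mA, sleep_s):
    """Net charge saved per sleep/wake cycle; positive means compression pays off."""
    return charge_saved_mAs - core_idle_current_mA * sleep_s
```

`decompression_delay_ms(2000)` evaluates to 0.04 ms, matching the worst-case figure quoted above. The break-even interval scales inversely with the idle current, which is why the duty cycle matters.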

Conclusion

IoT devices that employ code shadowing can enjoy significant energy savings by using code compression. The compressed application code needs a smaller NVM device for long-term storage, and the system consumes significantly less time and energy reading the compressed code from the NVM into the on-chip SRAM. An efficient hardware decompression engine, like the IP core available from CAST, can decompress the code in-line (as it is read out of the NVM), at the cost of practically negligible additional delay or energy usage.